Is Seeing Believing? Ensuring Students Are Prepared for a World of Constantly Altered Images and Videos

by Frank W Baker, media literacy educator

(Submitted 6/20 for August/Sept consideration by MiddleWeb)

The evolution of artificial intelligence (AI) poses a growing challenge for educators. You may be asking: what does this mean for me, and how can I make sure my students have the critical thinking and media literacy skills to question what they see?

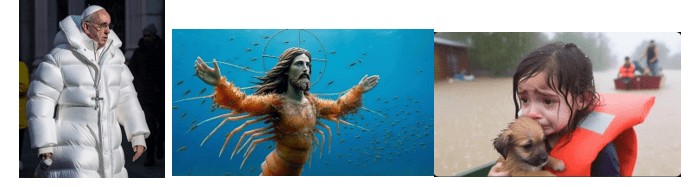

No doubt you and your students have already been exposed to images that, whether you realized it or not, were produced by AI. How can you tell? Why is this important?

If we don’t prepare students to question the explosion of digitally altered images, they may become more susceptible to believing whatever they see. Skepticism and discernment are more important than ever.

Since students are spending more and more of their time on social media, it may be wise for middle grades educators to consider how to address this new media habit.

A study recently published in the journal MEDIA found that “individuals with low critical thinking skills are significantly more vulnerable to fake news generated by artificial intelligence (AI), especially among young adults.”

Fake news generally refers to news stories or images that are deliberately fabricated or significantly distorted to deceive the reader, often lacking verifiable facts, sources, or quotes. But the meaning of that phrase has changed over time, with many now using it to discredit journalists and media organizations.

The Role of Media Literacy in Confronting AI & Fake Content

If you’re reading this and have taken part in any professional development around media literacy, then you know that critical thinking and critical viewing are at the core. But if you’ve never taken part in a media literacy workshop, then you may not be prepared to help students recognize or combat the “bad actors”.

Examples of the tactics “bad actors” use include:

1. Deepfakes: video and audio deliberately manipulated to fool people

Examples include: using existing video (or sound) of well-known people and altering one or both elements to change the original intent

2. Malinformation: when true information is used but taken out of context for the purpose of causing harm

Examples include: actual photos or video whose description has been changed to fool the viewer

3. Amplifying divisive issues: the deliberate targeting of people already in a politically polarized environment

Examples include: bloggers, podcasters and others, who may have a large following, taking advantage of an issue or attempting to make it controversial

4. Using authentic content to draw in a trusting audience, then offering them misinformation

Using Media Literacy Questions and Strategies

One of the first media literacy considerations is “who is the author or producer of the message,” but many times it is impossible to know who created it. Often the message is shared by many people on social media without any consideration of whether the image is authentic. Ask students to research the source of the image using well-regarded tools such as Google Reverse Image Search and TinEye.com.

Fact-checking is an important consideration here: many fact-checking websites are reliable for verifying whether a news story or image is authentic. Students should become accustomed to using these:

Another important media literacy consideration is: “what techniques does the producer use to make the message attractive or believable?” As an educator, you can help your students become proficient at identifying the ways producers use AI.

- Unusual or Inconsistent Details: AI-generated images often contain minor, noticeable detail errors. Encourage students to look for abnormalities like asymmetrical facial features, odd finger placement, or objects with strange proportions.

- Texture and Pattern Repetition: AI sometimes struggles with complex textures or patterns, leading to noticeable repetition or awkward transitions. Students should look for unnatural patterns in textures like hair, skin, clothing, or background elements.

- Lighting and Shadows: AI-generated images can have inconsistent or unrealistic lighting and shadows. Students should check if the lighting on different objects in the image matches and if the shadows are consistent with the light sources.

- Background Anomalies: Backgrounds in AI images can be a giveaway. Many are overly simplistic, overly complex, or contain elements that don’t belong. Encourage students to pay attention to the background as much as the main subject.

- Facial Features: Faces generated by AI can sometimes appear slightly off. This can include oddities in the eyes (like reflections or iris shape), ears, or hair. These features are often subtly surreal or unnaturally symmetrical/asymmetrical.

- Contextual Errors: AI can struggle with context. An object might be out of place for the setting, or there might be a mismatch in the scale of objects. Encourage students to consider whether everything in the image makes sense contextually.

- Text and Labels: AI often struggles with replicating coherent and contextually accurate text. If there’s text on the image, it can sometimes be jumbled, misspelled, or nonsensical in AI-generated images.

- Digital Artifacts: Look for signs of digital manipulation, like pixelation, strange colour patterns, or blur in areas where it doesn’t logically belong.

- Emotional Inconsistency: AI-generated faces may have expressions that don’t quite match the emotion or mood the image conveys.

Source: https://elearn.eb.com/real-vs-ai-images/

A third media literacy consideration is “what is the purpose of this message and why was it sent?” There are many positive uses of artificial intelligence, but when most of what we see is manipulated in order to fool us, it begins to erode the trust we all have in what we see.

Students may also be unaware that deceptive AI-generated media are often created to generate profit. The producers depend on clicks, which may mean the audience is being exposed to marketing or advertising.

Want to help your students question AI-generated media? I recommend this new short produced by the Columbia Journalism Review. The goal here is to get them to recognize the techniques used to produce popular AI-generated images.

Recommended readings:

How to identify AI-generated videos online

https://mashable.com/article/how-to-identify-ai-generated-videos

AI slop: Tips from a tech expert on how to spot fake pictures and videos

https://share.google/

Using AI tools for writing can reduce brain activity and critical thinking skills, study finds

https://time.com/7295195/ai-chatgpt-google-learning-school/

What Media Literacy Means in the Age of AI

https://www.govtech.com/education/k-12/istelive-25-what-media-literacy-means-in-the-age-of-ai

AI Chatbots: Are They the Next Big Spreaders of Misinformation?

https://opentools.ai/news/ai-chatbots-are-they-the-next-big-spreaders-of-misinformation

AI Literacy Guide: How To Teach It, Plus Resources To Help

https://www.weareteachers.com/ai-literacy-guide/

AI Literacy Lessons for Grades 6–12

https://www.commonsense.org/education/collections/ai-literacy-lessons-for-grades-6-12

Seeing is no longer believing: Artificial Intelligence’s impact on photojournalism

https://jsk.stanford.edu/news/seeing-no-longer-believing-artificial-intelligences-impact-photojournalism